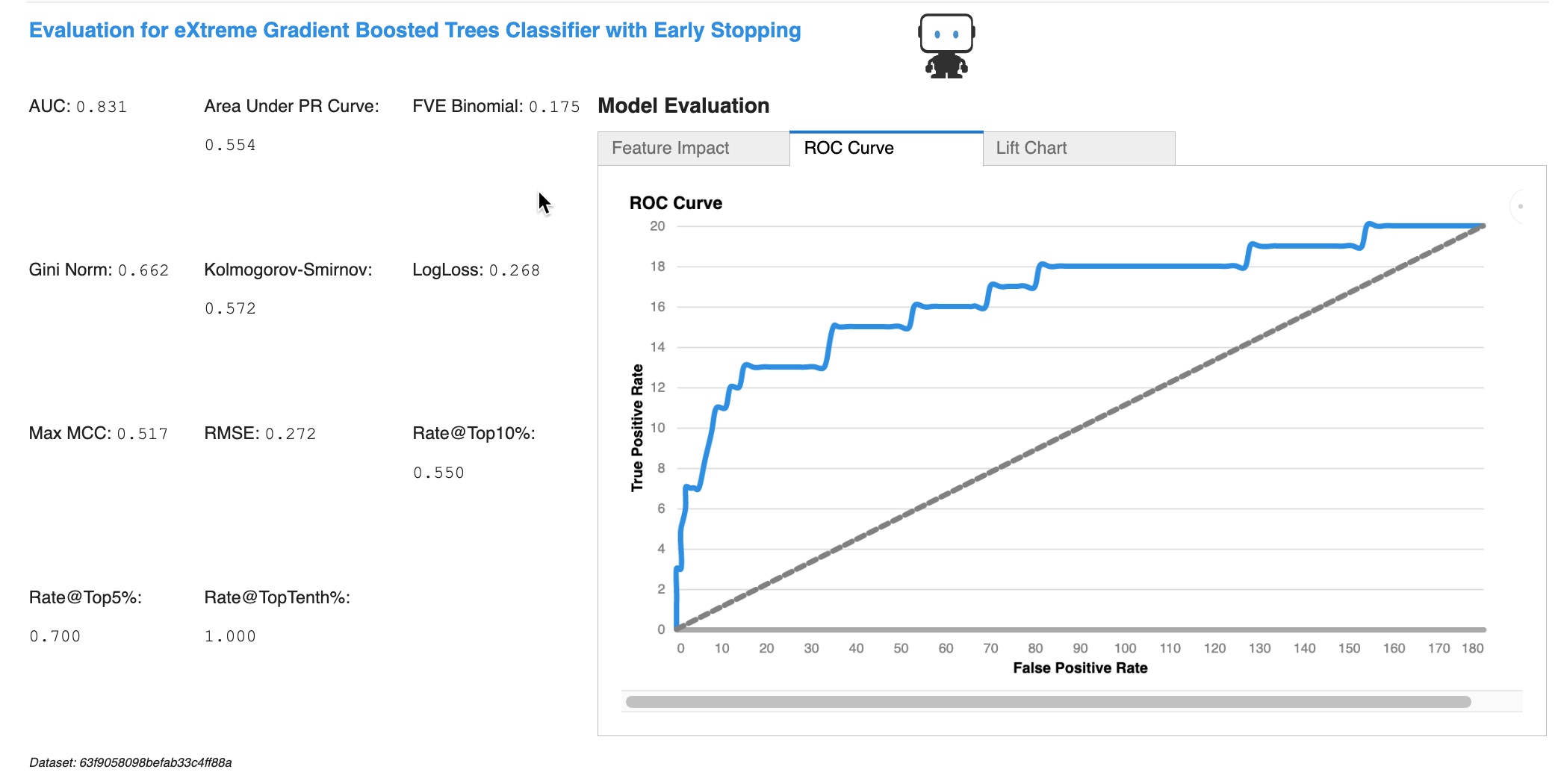

Evaluation

Evaluate a model's performance, optionally using an external test dataset.

What is an “external test” dataset?

An “external test” dataset (or “external data”) is a set of data that was not used either to train the model or to evaluate it relative to other models (e.g. within a DataRobot project). An external holdout dataset can help assess how a model might perform on new data.
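As a rough illustration of the idea, one way to obtain an external test set is to split it off before any modeling begins. The sketch below uses scikit-learn's train_test_split on a small synthetic DataFrame. The column names (including the is_bad target), the synthetic values, and the 20% split size are illustrative assumptions and are not part of drx.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Synthetic data standing in for a real dataset (hypothetical columns).
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "income": rng.normal(50_000, 15_000, size=200),
    "loan_amount": rng.normal(10_000, 3_000, size=200),
    "is_bad": rng.integers(0, 2, size=200),
})

# Set aside 20% of the rows before any training or model selection.
# stratify keeps the class balance similar in both parts.
train_df, external_test_df = train_test_split(
    df, test_size=0.2, stratify=df["is_bad"], random_state=0
)

# No row should appear in both sets.
overlap = train_df.index.intersection(external_test_df.index)
print(len(train_df), len(external_test_df), len(overlap))
```

Only train_df would then be used for training and model comparison, while external_test_df is kept aside for a final check.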

Evaluate in-notebook using drx

In notebook environments, users can access measures of model performance and feature importance by calling evaluate on a drx model.

import datarobotx as drx

drx.evaluate(model)

Users can also assess the performance of a model on an external test dataset. Currently, when an external dataset is used, a few additional plots are available, depending on the target type.

from datarobotx import evaluate

evaluate(model, evaluation_data=external_test_data_in_dataframe)

Evaluate using other libraries

Where possible, drx models implement the predict and predict_proba methods in a manner that aligns with scikit-learn. This enables drx models to interoperate with scikit-learn and other libraries that expect this interface, sometimes without modification.

import pandas as pd
from sklearn.metrics import confusion_matrix

pd.DataFrame(confusion_matrix(test_df['is_bad'], model.predict(test_df)))

|   | 0 | 1 |
|---|---|---|
| 0 | 180 | 0 |
| 1 | 9 | 11 |
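To make the interoperability pattern concrete without a trained drx model at hand, the sketch below uses a scikit-learn LogisticRegression as a stand-in for any object that exposes predict and predict_proba. The synthetic data, the feature names, and the stand-in model are assumptions for illustration only. With a drx model, the same metric calls should apply wherever its methods follow the scikit-learn conventions.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, roc_auc_score

# Synthetic binary-classification data (hypothetical feature names).
rng = np.random.default_rng(42)
X = pd.DataFrame(rng.normal(size=(300, 2)), columns=["x1", "x2"])
noise = rng.normal(scale=0.5, size=300)
y = ((X["x1"] + 0.5 * X["x2"] + noise) > 0).astype(int)
y.name = "is_bad"

train_X, test_X = X.iloc[:200], X.iloc[200:]
train_y, test_y = y.iloc[:200], y.iloc[200:]

# Stand-in for a drx model: any estimator with predict / predict_proba.
model = LogisticRegression().fit(train_X, train_y)

# Rows are actual classes, columns are predicted classes.
labels = [0, 1]
cm = pd.DataFrame(
    confusion_matrix(test_y, model.predict(test_X), labels=labels),
    index=pd.Index(labels, name="actual"),
    columns=pd.Index(labels, name="predicted"),
)
print(cm)

# For this scikit-learn estimator, predict_proba returns one column per
# class; column 1 holds the estimated probability of class 1.
auc = roc_auc_score(test_y, model.predict_proba(test_X)[:, 1])
print(f"ROC AUC: {auc:.3f}")
```

Passing labels explicitly keeps the matrix 2x2 even if one class happens to be absent from the predictions, and naming the index and columns makes the actual-versus-predicted orientation explicit.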

API Reference

evaluate: Show evaluation metrics and plots for a model